So I trained a Stable Diffusion model using my art

Is this my external imagination or the internet’s?

I wrote about AI art generators here before as “external imaginations” and cautioned the reader about using them in place of their own imagination.

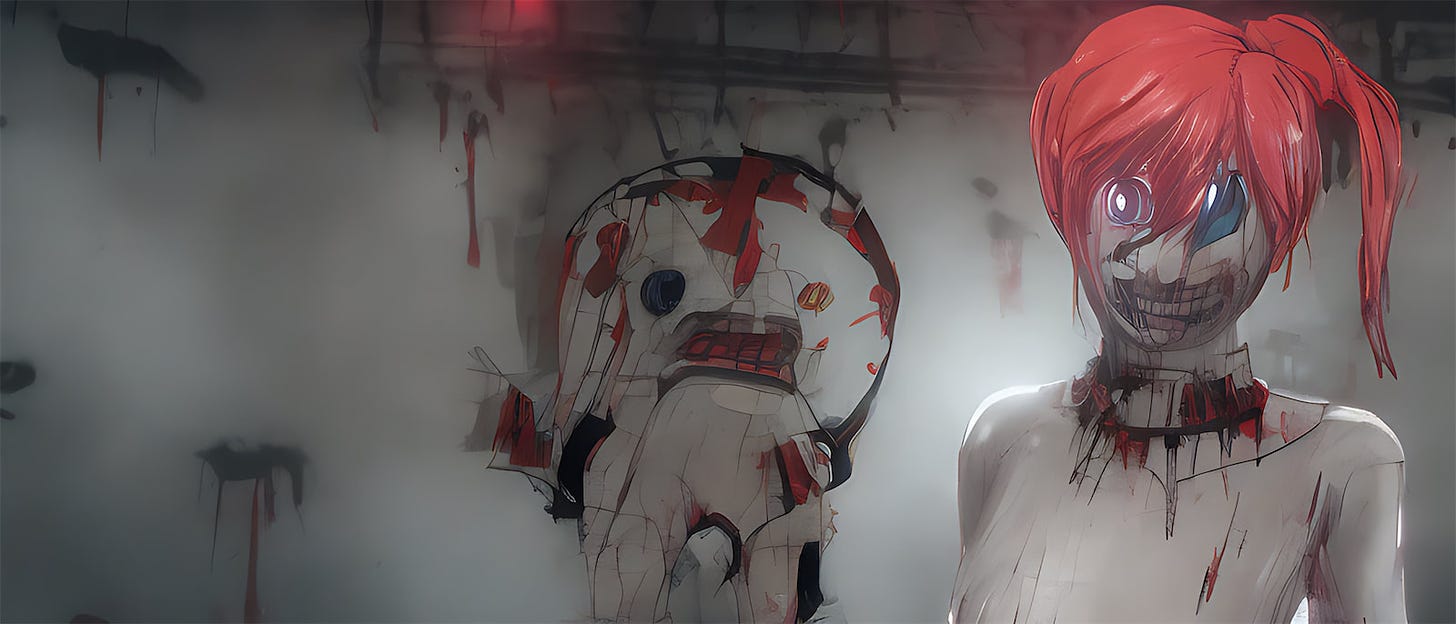

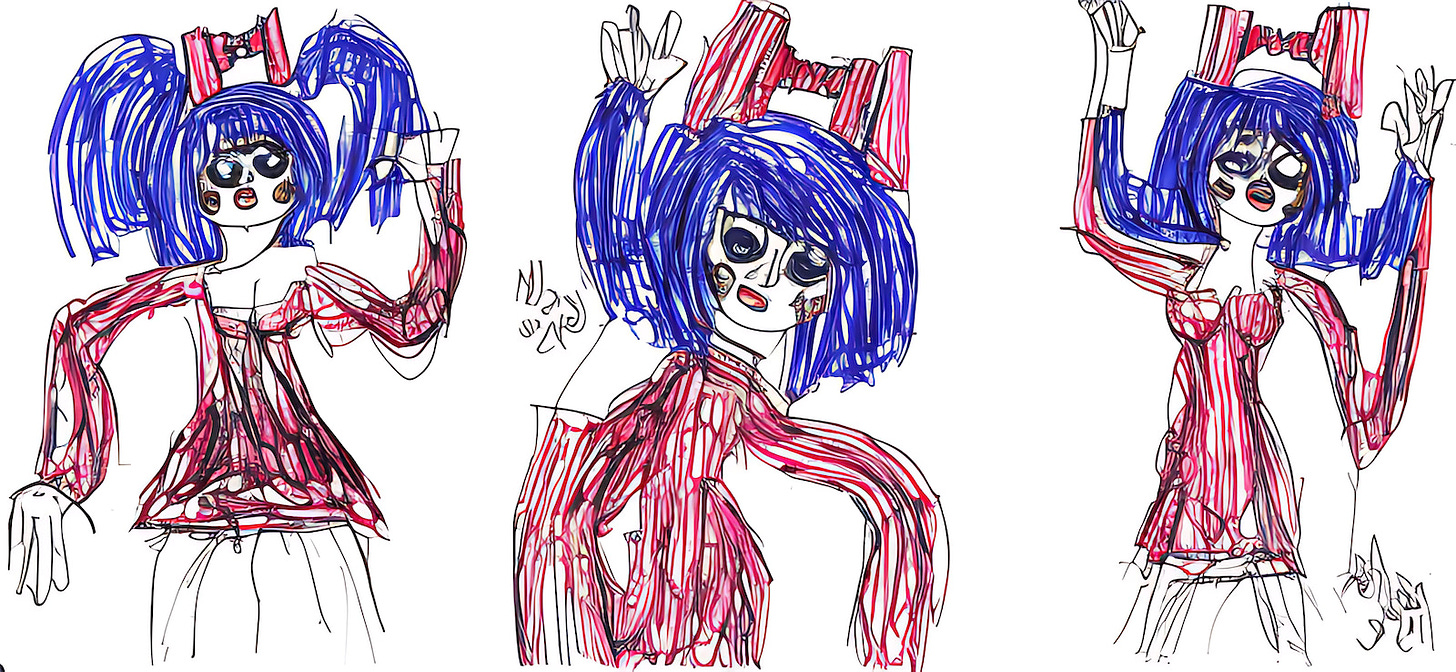

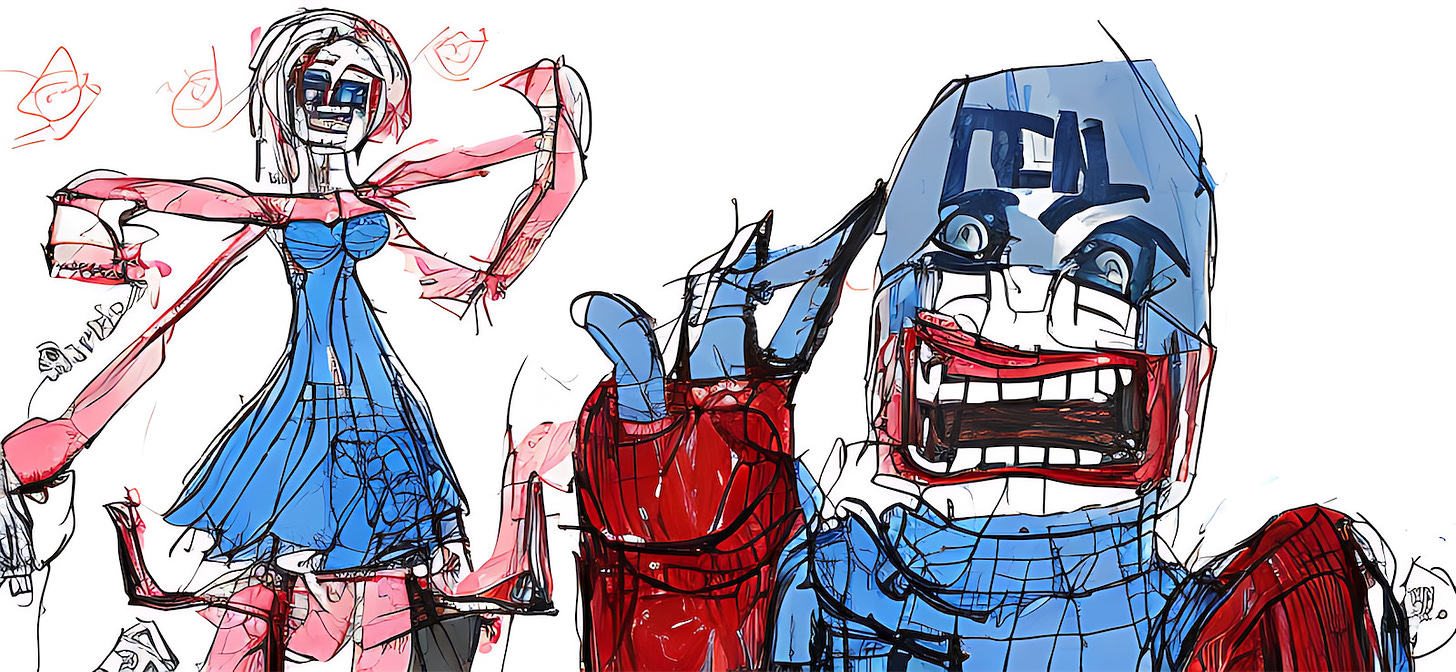

This past week I started experimenting with training my own Stable Diffusion model based on my own art.

It spits out interesting image after interesting image. I generated hundreds of them, and this week it was my favorite show to watch: it generates a new image every 6 seconds or so, and I really liked a lot of them.

Doing this got me thinking about all sorts of things. What does this mean to me as an original artist?

Is this like having an assistant trained in my style that can draw better than me? If so, that’s pretty nice.

Am I making myself redundant as an original artist by creating this model? Or only if I release the model publicly?

What does the model leverage the most? My visual style, the character styles, faces? Everything?

If my model exists, am I still required to make “my” art?

What is the future of these generators?

Some ideas:

Eventually they will run in realtime. I see them being used like post effect filters/shaders applied in realtime.

For example, with a “film”: a human or AI will generate a video/image sequence of a generic-looking film (essentially an animated storyboard, with no need to render any sophisticated visuals, as the diffusion model will provide that in realtime). Then, when a human views it, they can choose which diffusion “filter” to view it with.

Do you want it in the style of a David Lynch film? Do you want all the characters to be furries? Do you want all the characters to be naked Instagram influencers? Do you want it in the style of Van Gogh? Do you want every actor to be Nicolas Cage?

This will obviously push more art and entertainment into the realtime/game engine space.

If you played my M doll interactive and changed the visual post fx or tweaked the color correction in realtime, imagine something like that, except you’ll be using different diffusion models as realtime filters.

So it’s definitely been thought provoking to train these models and look at what they generate.

Here are some of my thoughts after this week:

Since ANYONE can create a model from anyone’s art/images, I think it would be smart for future artists to keep what we’ll call their “source art” SECRET/PRIVATE. Once it’s online or shared with anyone, anyone else can create a model from it and generate new “art” in that style.

If your style is captured by a model, why are you needed? So protect your source images and only release images created from your model. It’s going to be like protecting your source code, and you can always go open source if you choose.

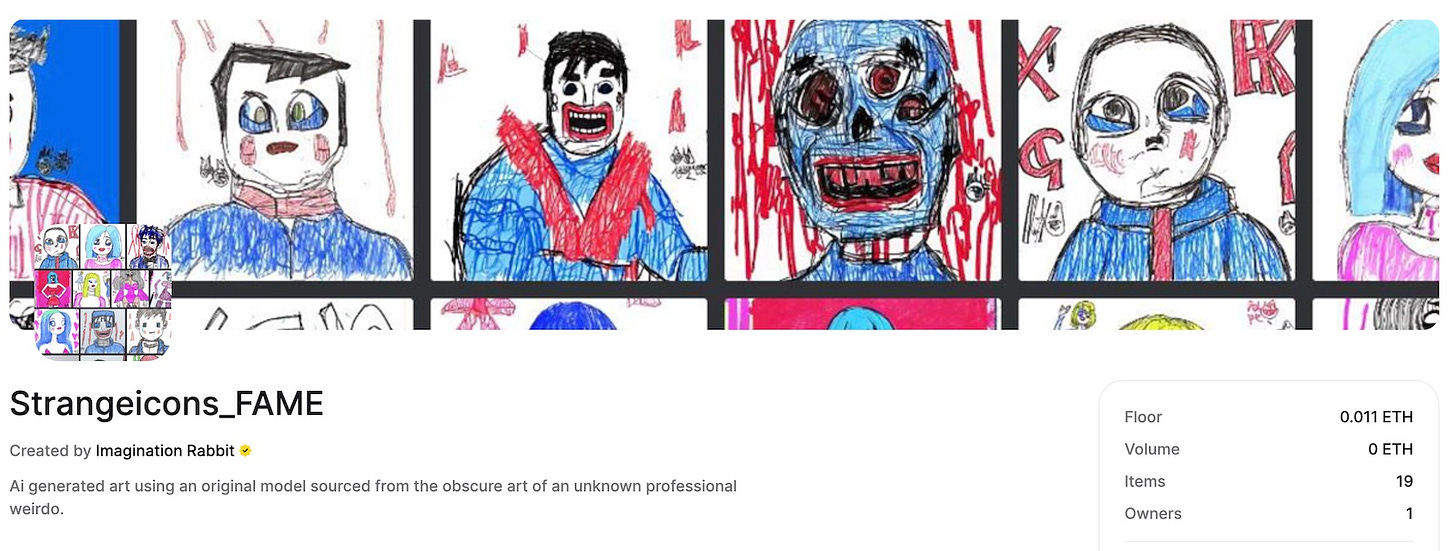

It seems natural to me that this generated art will be monetized using NFT tech: you’d use your model to generate images which you would then sell as NFTs. You could even generate the art + NFT from within a realtime application/dapp. Maybe this is how NFT mints will be handled in the future?

For fun I used my model to create an NFT collection of FAMEous people, you can find it here. I would never draw famous people etc. myself, but hey, my model can do it for me haha

More thoughts on the NFT stuff…

The art doesn’t exist yet. You log onto an app or launch an application, pay the mint fee, and generate a procedural image using a custom model trained by an original artist. It’s a new original piece owned by you, with the artist’s commission fee baked into the NFT.

It’s now so easy to create interesting original art. But is it original if Stable Diffusion generates it with my original model? I don’t know, but let’s call it original art anyway.

So I can now generate thousands of interesting original images every day- what am I going to do with them? Why make them? They have to have some purpose?

What do these images mean? I didn’t draw/paint them so are they just interesting looking emptiness? Or since it was trained with my art do they retain the “meaning” I put into my original art used to train the model?

For me, an ideal scenario with this tech would be to use my model as a realtime post effect for my films/games/animations/interactives, but we’re not there yet technologically. It takes my 4090 between 3 and 10 seconds to generate an image, so we need more power to generate at 24fps. Could probably do that with cloud GPUs rn tho.
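To put a rough number on that gap, here is a back-of-the-envelope sketch. It assumes the 3 to 10 seconds per image mentioned above, and it assumes every frame is generated from scratch, one at a time, with no batching or reuse between frames. The function name `required_speedup` is hypothetical and only here for illustration.

```python
def required_speedup(seconds_per_image: float, target_fps: float = 24.0) -> float:
    """Return how many times faster generation must be to sustain target_fps.

    Assumption: one full generation per frame, no batching or frame reuse.
    """
    # At target_fps, each frame has a budget of 1 / target_fps seconds,
    # so the speedup needed is seconds_per_image / (1 / target_fps).
    return seconds_per_image * target_fps


# The two ends of the 3 to 10 second range quoted for a single 4090.
for seconds in (3.0, 10.0):
    factor = required_speedup(seconds)
    print(f"{seconds:.0f} s/image needs about {factor:.0f}x faster for 24fps")
```

Under those assumptions the gap is somewhere between 72x and 240x, which is the kind of distance that points toward many GPUs in parallel or much faster models rather than a small hardware bump.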

Ideally I could load this model into my Nightmare Puppeteer animation creator, and you could use it to make those animations look like the above images, or use any model you want to change the look.

So it’s been an interesting week playing with this stuff. But yeah, I imagine the future of custom diffusion models and art is going to involve NFTs and realtime applications/games.

It might also involve AI-generated artists creating AI-generated art and speech, like Dhea Rhea.

I leave you with this “Pitch” I made to Pixar using images generated with my model using the prompt “Pixar” haha

I love the idea of "secret" or "occult" art, to draw a line between one's creative private and public life.

As an artist myself I'm still negotiating how AI will serve me. The physical senses are like a cigarette filter: they sit between the imagination and reality. AI is only as useful as the physical data it's fed. But creativity is an immeasurable playing field that we still don't have all the answers to.

What keeps me sober is knowing that whether one is original or not, the world will still not care, because people are comfortable with the familiar and afraid of the new. Whatever originality exists out there or within us, The Matrix won't take kindly to it.

But for now, the role that AI will serve for me is ideating abstract concepts and doing color and value work for my ink drawings.